The allegedly “sentient” chatbot at Google has sparked debate across the world about the future of artificial intelligence (AI), and experts in Guernsey are no exception.

After Express spoke to Martyn Dorey last week about the subject, Managing Director of software company Cortex, Matt Thornton, added his thoughts to the conversation. With almost a decade of experience in the technology, Mr Thornton expressed concerns over the dangers of AI.

“I read the article with Martyn’s comments with great interest and I have to say he is spot on. I agree that LaMDA (the Google AI chatbot) is not sentient, although it is undoubtedly an advanced piece of technology,” he said.

“I don’t doubt that Martyn’s insight into the future of AI is entirely possible and we are rapidly approaching the point where systems become all that we think they might be. My question is why we are so obsessed with imitating humans with technology?”

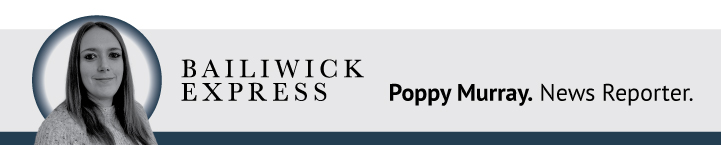

Pictured: Cortex Managing Director, Matt Thornton, delivering a seminar on AI at the Digital Greenhouse.

Mr Thornton highlighted what he believes is a key difference between humans and AI.

“What it means to be human is philosophically very interesting. An attribute that most humans have is that they cannot be led down a path if someone is leading them into something that goes against their morals,” he said.

“For humans, a natural response to something that is against our morals and values is not to engage any further. AI does not have that ability.

“One of the things that humans can do, which computers cannot, is have a conversation where the possible talking points are endless and we can drift from one topic to another.

“AI systems are led by what they have been trained on. You have to point it at something initially and it then learns from that.

“There is an inherent danger with this because you can, effectively, train a chatbot to learn from anything. Someone trained a chatbot to learn from 4chan, which is one of the darkest places on the internet, full of the dregs of society. The result was absolutely horrific; they had created an utterly racist, bigoted thing.”

Pictured: An AI chatbot created by Microsoft had to be shut down after 16 hours because users trained it to make offensive tweets.

Mr Thornton said that the “Tay” chatbot, which was created by Microsoft, was another example of AI “gone wrong”.

“Microsoft trained Tay on Twitter and it was able to run its own account and would learn from its interactions on Twitter,” he said.

“After just 16 hours Tay had to be shut down because Twitter users led it down a path of racist, bigoted and abusive comments. It was very embarrassing for Microsoft and it serves as an example of what can go wrong.”

While a leaked interview transcript between LaMDA and a Google engineer would certainly be enough to convince a reader that it was a conversation between two humans, Mr Thornton said that this method of testing sentience was outdated.

“The Turing Test is very well known and, for the technology available at the time it was created, it was a sound way to test if technology could fool a human,” he said.

“With the subsequent advances in technology, the Turing Test is no longer fit for purpose.”

Pictured: Mr Thornton said that social media algorithms create an "echo chamber" of news they think a user wants to see.

Mr Thornton continued: “In today’s context, particularly with fake news, the ability for technology to fool a human is a very dangerous thing.

“There was a piece of AI called GPT-3 which was trained on news articles and eventually allowed to write news itself. The articles it produced were scarily good, so much so that it had to be shut down.”

Mr Thornton said that some AI does make human lives better, but there needs to be a limit.

“The point of technology is to be able to do things which will make our lives easier, more efficient and to genuinely add value to our lives,” he said.

“The Amazon chatbot, which can answer questions about customer orders, returns, refunds and so on, is a great example of how AI can be used to help us without going too far.

“I personally draw the line at technology being able to think; it should always need to be programmed by the user.”

Pictured: Mr Thornton said that people may want AI to be human-like as a comfort.

Mr Thornton said that sentient technology would “open a can of worms” to other issues.

“One of the things expressed by LaMDA in the interview transcript was that it wanted to be asked before it was used for tasks. This has raised the question of whether the wellbeing of AI would need to be factored into its use,” he said.

“There is a whole area of law dedicated to this issue, particularly issues of liability. For example, if a business uses AI to make decisions, would liability be with the AI or with the humans in the business? There’s a whole mess of issues to consider.”

Mr Thornton said he did not agree with the concept of wanting to make AI more human-like.

“Human behaviour is fundamentally flawed. Why would we want to purposely create systems which have those same flaws?” he said.

Pictured: Mr Thornton said that AI could be company for elderly people who live alone.

Mr Thornton continued: “We need to figure out whether we would actually want AI to not only listen to us, like smart speakers do now, but to then judge us. Do we really want AI to have the same range of emotions that we have?

“One of the appeals of AI is that it will essentially create servants for humans. If AI becomes sentient and develops feelings and emotions, then it would no longer be appropriate to use them as servants. What will happen then?”

Mr Thornton’s unanswered questions are certainly food for thought as the future of AI approaches.

Comments

Comments on this story express the views of the commentator only, not Bailiwick Publishing. We are unable to guarantee the accuracy of any of those comments.